The AI Visualization Paradox: How Effortless Learning Creates a Digital Grave

I’ve noticed something interesting lately. With the release of new visual AI models like Nano Banana PRO, people have found countless useful applications. One particularly popular use case: transforming complex podcasts, articles, videos, books, and blogs into visual interpretations and cheat sheets.

From my experience, this approach has both significant benefits and hidden drawbacks.

When AI Visualization Works

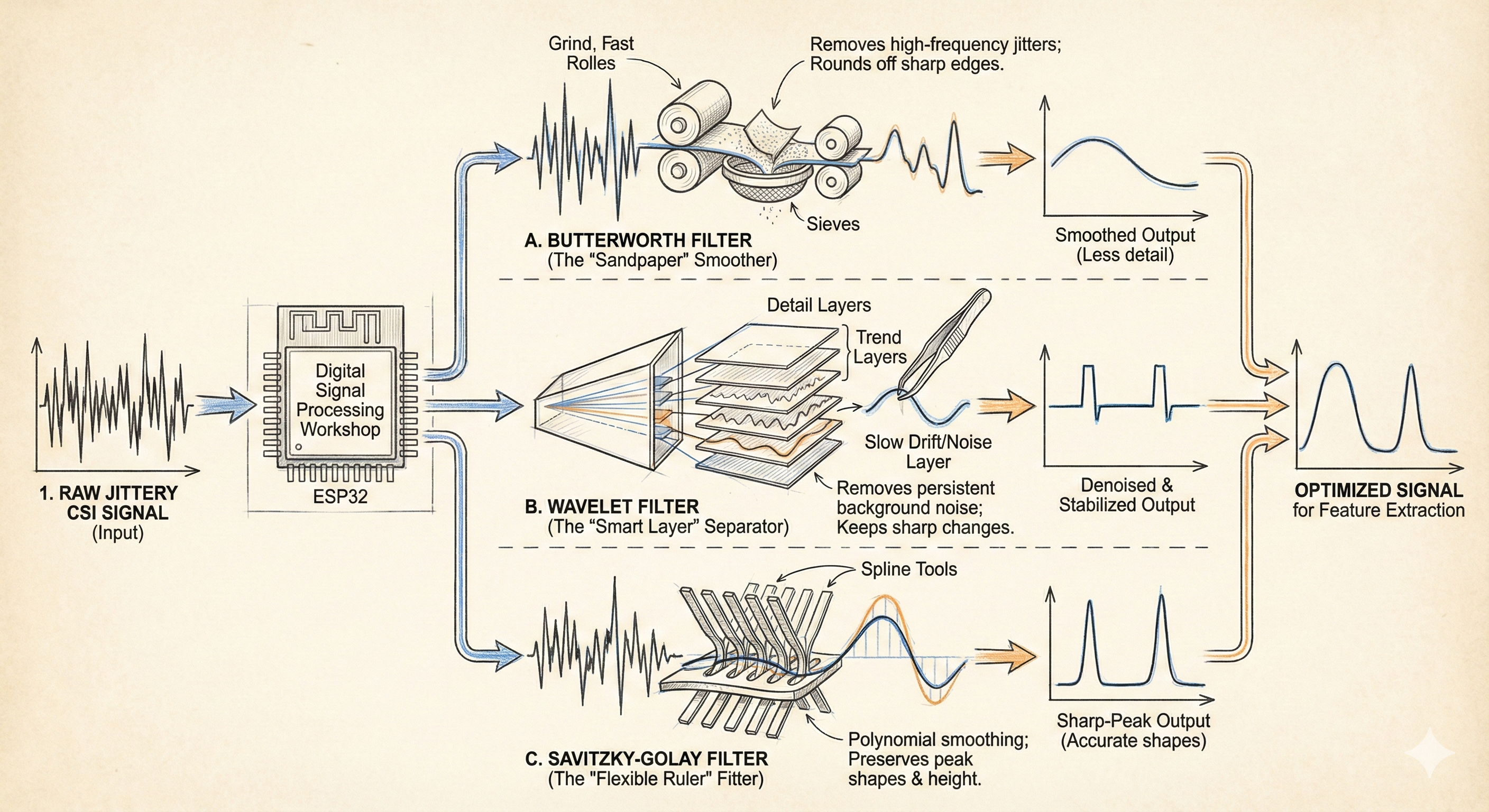

Visual explanations genuinely help with understanding. For example, when I needed to understand how different filters act on WiFi CSI (channel state information) signals in one of my projects, an AI-generated illustration made the concept click immediately. The filters, signal processing, and data flow became crystal clear through visualization.

My AI-generated visualization explaining WiFi CSI signal filters in the ESPectre project

These AI-generated diagrams and summaries do work — when we actually use them.

The Hidden Problem

But here’s where things get interesting. When we ask an AI model to transform complex content into simple, “understandable” visualizations, we, the people requesting the output, spend almost zero effort.

The result is a beautiful, seemingly useful image that’s supposedly designed to help us. And this is where, in my opinion, the problem emerges.

The Instant Dopamine Trap

Our brain, having spent almost zero effort, doesn’t value the material it received and shelves it for later. We get a high-quality visualization of complex material, but instead of becoming a learning aid, it turns into a dusty piece of information in our digital grave, never to be reviewed again.

This points to a fundamental problem: by creating these simplifications, we get instant dopamine. We spend very little effort but receive an immense benefit (or so it seems). After this quick and easy dopamine hit, the brain stops caring about the problem and dismisses it.

The Paradox

A paradoxical situation emerges: a tool designed to simplify understanding and immerse a person more deeply in a topic actually distances them from it, relegating these useful simplified visualizations to a huge digital graveyard.

Think about it:

- How many “summarized” articles are sitting unread in your notes?

- How many AI-generated diagrams have you created but never looked at again?

- How many “quick explanation” videos are bookmarked but unwatched?

The easier it is to obtain knowledge, the less we value it. The less we value it, the less likely we are to internalize it.

My Solution: Manual Processing

I’ve developed an approach to combat this problem for information that’s truly important to me.

The Three-Step Filter

Step 1: Scan with AI

- Use an LLM to quickly scan the piece of information

- Maybe create a visualization

- Look at quick conclusions

- Evaluate: Is this information important to me? Do I want to dive deeper? Is it evergreen? Will I use it in the future?
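If it helps to make the evaluation at the end of Step 1 explicit, here is a minimal sketch in Python. The names (`Triage`, `worth_manual_processing`) and the threshold of three "yes" answers are illustrative assumptions, not a rule from my process; the four fields simply mirror the four questions above.

```python
from dataclasses import dataclass


@dataclass
class Triage:
    """Answers to the four Step 1 questions (hypothetical structure)."""
    important_to_me: bool
    want_to_dive_deeper: bool
    evergreen: bool
    will_use_later: bool


def worth_manual_processing(t: Triage, min_yes: int = 3) -> bool:
    """Return True when at least `min_yes` answers are yes.

    The default threshold of 3 is an assumption chosen for illustration.
    """
    answers = [
        t.important_to_me,
        t.want_to_dive_deeper,
        t.evergreen,
        t.will_use_later,
    ]
    # Booleans sum as 0/1, so this counts the "yes" answers.
    return sum(answers) >= min_yes


# Example: important, worth a deeper dive, evergreen, but no clear future use.
candidate = Triage(True, True, True, False)
decision = worth_manual_processing(candidate)
```

Anything that fails the check stays a quick scan; anything that passes moves on to Steps 2 and 3.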

Step 2: Consult, Don’t Delegate

If the information is genuinely useful and needed, I consult with the LLM (note: consult, not ask it to do the work for me). I engage in a dialogue, challenge assumptions, and ask for alternative perspectives.

Step 3: Manual Capture

Then I manually write or sketch the key insights into a dedicated notebook. By hand. With effort.

Why This Works

The manual processing forces my brain to:

- Engage actively with the material

- Filter what’s truly important

- Reorganize information into my own mental model

- Value the knowledge through invested effort

The physical act of writing or drawing creates neural pathways that passive consumption never will.

Example from my sketchpad where I manually broke down a topic.

Yes, hello to my not-so-great drawing skills haha.

The Uncomfortable Truth

AI tools are incredibly powerful for learning — but only if we use them as assistants, not replacements for thinking.

The question isn’t “Can AI help me learn faster?” It’s “Am I using AI in a way that actually helps me learn, or just makes me feel productive?”

Effortless knowledge acquisition feels productive. It triggers dopamine. But retention requires effort, and effort requires intention.

Finding Balance

I’m not saying never use AI visualizations. They’re incredibly valuable. But:

- Use AI to accelerate understanding, not replace it

- If it’s important, engage manually

- Dopamine from creation ≠ actual learning

- Your digital grave is full — be selective

The goal isn’t to reject AI tools. It’s to use them consciously, understanding that the effort we invest correlates directly with the value we extract.

What’s your experience with AI-generated learning materials? Do they end up in your digital grave, or have you found ways to make them stick?